A Brief History of B2B Data Sharing – and What’s In Store

Craig Lukasik has been busy! This is his third guest blog on Supply Chain View From the Field, and it’s a good one! Data sharing sounds good in theory – but there is a lot more to it, as we shall see.

Supply chain partners have been using EDI, a standard electronic format, to share data and transact business for more than sixty years, and it continues to evolve. Over the decades, EDI standards emerged that cover a variety of supply chain artifacts and activities, from Purchase Orders & Acknowledgements (EDI 850 & 855) to Invoices (EDI 810) to Shipping Manifests (EDI 856).

In this blog, we look at how EDI fails to help with Supply Chain Risk Management (SCRM) because of its focus on the two-party interaction; how a model proposed by a recent paper in the International Journal of Operations & Production Management can help organizations better understand and assess the risk inherent in complex, hierarchical, international supply chains; and how data sharing innovations provide a mechanism by which supply chain partners can assess risk by leveraging real data.

IBM’s Eye on the Future of EDI

According to IBM’s 2021 point of view (PoV), “The Future of EDI: An IBM point of view,” “despite EDI’s historical success, it still leaves gaps in addressing today’s emerging digital business supply chain challenges.” The PoV proposes that “API and EDI integration” is “the future” and cites examples where APIs (e.g. in real-time sensors, IoT, and blockchain technologies) will be able to trigger EDI messages that help ensure efficient, high-quality, and safe B2B transactions.

IBM’s vision for EDI describes a natural evolution of a system where, over time, new mechanisms will help to streamline the supply chain flow. This concept of the evolution of supply chain flows and how they resemble nature is outlined in Dr. Rob Handfield and Tom Linton’s new book, Flow. They propose that the velocity of the ever-improving flow of supply chain information sharing is as inevitable as water flowing down a hill carving a channel that reflects the most efficient path.

SCRM Evolution: Looking Beyond the Dyadic Relationship

The buyer/supplier transaction-oriented nature of EDI left a gap that, in the early days of the pandemic, nearly everyone witnessed.

“Finding toilet paper resembled a Hunger Games quest. Ordering a new car meant hearing the salesperson blabber on about electronics shortages causing their low inventory. I donated rain ponchos to a hospital where my cousin works so they could have protection for the staff to wear because their supplies were exhausted!”

- From my recent LinkedIn post

It is clear that even the most prestigious firms struggle with managing supply chain risk. Building APIs to supplement EDI will not necessarily improve risk management, and APIs present their own set of challenges, as I outline here. The APIs mentioned in IBM’s PoV focus more on improving the flow of transactions than on predictive analytics that aim to mitigate supply chain risks. Meanwhile, the current state of data sharing in the supply chain is generally inefficient, slow, and lacking in standards. In a recent LinkedIn post, Dr. Sime Curkovic describes the current state of data sharing in the supply chain for analytical purposes: “the two most used technologies: email & excel (huge color coded spreadsheets)…and back and forth emails about spreadsheets.” In Flow, the authors found that “… 53% [of managers] spend more than 10 percent of their days looking for data that they need for analysis.”

Data scientists know that to build accurate machine learning models (e.g. to “forecast risks”), you need to incorporate a rich set of “features” and “parameters.” In the supply chain, the challenge is information asymmetry. Supply chain partners don’t see the same datasets and, therefore, typically cannot build accurate models due to lack of sufficient data.

In summary, EDI and APIs both have limitations when it comes to advanced analytics and data science for SCRM. However, a tool that most data analysts around the world are already skilled in can help: SQL. Before we explore data sharing with SQL, we’ll look at an approach that supply chain managers can use to understand the complexities of supply chain risk.

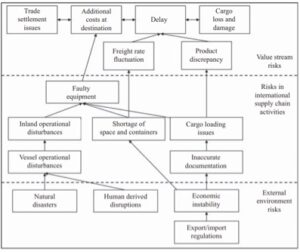

Interpretive Structural Modelling (ISM) for Supply Chain Logistics Risks and Interactions

“Risk Interaction Identification in International Supply Chain Logistics” (Dong-Wook Kwak et al., 2017), a research study in the International Journal of Operations & Production Management, proposes a model to help supply chain risk managers understand the interactions across various categories of risk. By using such a model, a firm can work with supply chain partners on data sharing strategies that give the buyer finer-grained visibility into the hierarchy of supply chain risk factors. In the subsequent sections of this blog, we will explore how data sharing can be achieved in a secure and scalable manner and how “Clean Room” technology promises to provide even greater risk analytics capabilities.
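
To make the idea of a risk hierarchy concrete, here is a minimal Python sketch of the generic ISM mechanics: build a reachability matrix from "risk A influences risk B" links, then partition the risks into levels. The four risk names and the links between them are hypothetical and are not taken from the paper; the sketch only illustrates the general technique, not the authors' specific model.

```python
# Hypothetical risks and influence links, for illustration only.
RISKS = ["port congestion", "shipment delay", "stockout", "customs hold"]

# ADJACENCY[i][j] == 1 means risk i directly influences risk j.
ADJACENCY = [
    [0, 1, 0, 0],  # port congestion -> shipment delay
    [0, 0, 1, 0],  # shipment delay  -> stockout
    [0, 0, 0, 0],  # stockout influences nothing here
    [0, 1, 0, 0],  # customs hold    -> shipment delay
]


def transitive_closure(adjacency):
    """Return the final reachability matrix (every risk reaches itself)."""
    n = len(adjacency)
    reach = [[bool(adjacency[i][j]) or i == j for j in range(n)] for i in range(n)]
    # Warshall's algorithm: if i reaches k and k reaches j, then i reaches j.
    for k in range(n):
        for i in range(n):
            if reach[i][k]:
                for j in range(n):
                    if reach[k][j]:
                        reach[i][j] = True
    return reach


def partition_levels(reach):
    """Peel off levels: a risk joins the current level when everything it
    still reaches can also reach it (reachability set within antecedent set)."""
    remaining = set(range(len(reach)))
    levels = []
    while remaining:
        level = []
        for i in remaining:
            reachability = {j for j in remaining if reach[i][j]}
            antecedent = {j for j in remaining if reach[j][i]}
            if reachability <= antecedent:
                level.append(i)
        levels.append(sorted(level))
        remaining -= set(level)
    return levels


if __name__ == "__main__":
    for depth, level in enumerate(partition_levels(transitive_closure(ADJACENCY)), 1):
        print(depth, [RISKS[i] for i in level])
```

In this toy example the first level holds the outcome risk (stockout) and the last level holds the root drivers (port congestion and customs hold), which is the kind of ordering a buyer could use to decide which upstream data is most worth asking partners to share.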

The Best Analytics API: SQL

Structured Query Language (SQL), a standardized language, was born in the 1970s and achieved commercial success through companies such as IBM (with their DB2 implementation) and Oracle. Today, SQL is everywhere; over 50% of software developers have SQL skills. Any firm with a database (Oracle, MySQL, SQL Server, etc.) will have analysts and technologists who can interrogate data using SQL.
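
As a small illustration of the kind of risk question an analyst can answer with plain SQL, the sketch below uses Python's built-in `sqlite3` module and an in-memory table. The `shipments` table, its columns, and the sample rows are all made up for this example.

```python
import sqlite3

# Hypothetical sample rows: (supplier, promised_day, delivered_day).
SAMPLE_ROWS = [
    ("Acme", 10, 12),
    ("Acme", 20, 20),
    ("Bolt", 5, 5),
    ("Bolt", 8, 7),
]

LATE_RATE_QUERY = """
    SELECT supplier,
           COUNT(*) AS shipments,
           AVG(CASE WHEN delivered_day > promised_day THEN 1.0 ELSE 0.0 END) AS late_rate
    FROM shipments
    GROUP BY supplier
    ORDER BY late_rate DESC, supplier
"""


def late_rate_by_supplier(rows):
    """Load rows into an in-memory table and return the late-delivery rate per supplier."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE shipments (supplier TEXT, promised_day INTEGER, delivered_day INTEGER)"
        )
        conn.executemany("INSERT INTO shipments VALUES (?, ?, ?)", rows)
        return conn.execute(LATE_RATE_QUERY).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for supplier, shipments, late_rate in late_rate_by_supplier(SAMPLE_ROWS):
        print(supplier, shipments, late_rate)
```

The same query text would work, with little or no change, against most SQL databases, which is the point of treating SQL as the common analytics interface.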

Data Sharing with SQL and Python

Databricks is a company known for creating open source technologies that drive analytics and AI at scale. Databricks’ founders created Apache Spark, which is now an essential distributed computation and analytics engine for many tech platforms. Likewise, Databricks’ innovative Lakehouse technology ushered in a new platform category that has helped to relegate traditional Data Warehouse technology (such as DB2, Oracle Exadata, and Snowflake) to the “legacy” pile of enterprise technologies.

Databricks recently created an open source technology called “Delta Sharing”. “Delta Sharing is the industry’s first-ever open protocol, an open standard for sharing data in a secured manner”. Data in a supplier’s cloud account can be shared with third parties in a secure and massively scalable manner, without the need to send copies of data. SQL is not the only way to query shared data: Python is one of many languages that support Delta Sharing. Nor are static datasets all that can be queried. For example, using Python, a data sharing consumer can stream table updates in near real-time. This capability is a potential game-changer for supply chain analytics across partners.
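
For readers who want to see what consuming a share looks like from Python, here is a minimal sketch using the open source `delta-sharing` connector. It assumes the data provider has sent you a profile file; the file name `config.share` and the share, schema, and table names are placeholders, not real resources. The connector is imported inside the function so that the URL helper can be tried without installing anything.

```python
def table_url(profile_path, share, schema, table):
    """Build the '<profile>#<share>.<schema>.<table>' address the connector expects."""
    return f"{profile_path}#{share}.{schema}.{table}"


def load_shared_table(profile_path, share, schema, table, limit=None):
    """Read a shared table into a pandas DataFrame.

    Requires `pip install delta-sharing` and a valid profile file
    issued by the data provider.
    """
    import delta_sharing  # imported lazily; only needed when actually reading

    return delta_sharing.load_as_pandas(
        table_url(profile_path, share, schema, table), limit=limit
    )


if __name__ == "__main__":
    # Placeholder names: replace with the values from your provider.
    print(table_url("config.share", "supplier_share", "logistics", "shipments"))
```

With a real profile file in place, a call such as `load_shared_table("config.share", "supplier_share", "logistics", "shipments", limit=100)` would return the first rows of the supplier's table without the supplier having to export or email a copy.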

The Future of Data Sharing: Clean Rooms

Data sharing with Delta Sharing is something that supply chain partners can get started with today. The technology is available now. Databricks also has plans to enable next-generation data and analytics sharing: Data Clean Room technology. With this technology, partners will be able to run analytics across two or more parties’ datasets without exposing sensitive data elements to each other. Code and machine learning models will also be part of this Clean Room ecosystem. This technology is a natural step in the evolution of the flow of analytics for supply chain partners.
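
The clean room idea can be illustrated with a toy Python function. This is only a conceptual sketch and does not represent any Databricks API: the record layouts, the field names, and the `min_group_size` suppression rule are all assumptions made for illustration. The function sees both parties' rows, but returns nothing except aggregates.

```python
def clean_room_late_rate(buyer_orders, supplier_shipments, min_group_size=2):
    """Join both parties' rows on order_id and return only the late-delivery
    rate per product category. Row-level data never leaves this function, and
    categories with fewer than min_group_size matched rows are suppressed."""
    shipments = {s["order_id"]: s for s in supplier_shipments}
    late_flags = {}
    for order in buyer_orders:
        shipment = shipments.get(order["order_id"])
        if shipment is None:
            continue  # no matching shipment on the supplier side
        late_flags.setdefault(order["category"], []).append(shipment["days_late"] > 0)
    return {
        category: sum(flags) / len(flags)
        for category, flags in late_flags.items()
        if len(flags) >= min_group_size
    }


# Hypothetical data held by each party.
BUYER_ORDERS = [
    {"order_id": 1, "category": "electronics"},
    {"order_id": 2, "category": "electronics"},
    {"order_id": 3, "category": "packaging"},
]
SUPPLIER_SHIPMENTS = [
    {"order_id": 1, "days_late": 3},
    {"order_id": 2, "days_late": 0},
    {"order_id": 3, "days_late": 5},
]

if __name__ == "__main__":
    print(clean_room_late_rate(BUYER_ORDERS, SUPPLIER_SHIPMENTS))
```

Here the single packaging order is suppressed because an aggregate over one row would reveal that row, which is the kind of safeguard a real clean room would need to enforce on the platform side rather than in user code.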